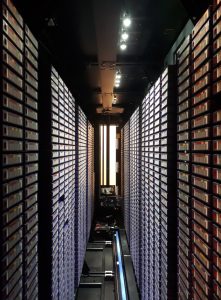

Rouge AMD GPU Cluster

The Rouge cluster was donated to the University of Toronto by AMD as part of its COVID-19 HPC Fund support program. The cluster consists of 20 x86_64 nodes, each with a single 48-core AMD EPYC 7642 CPU running at 2.3 GHz, 512 GB of RAM, and 8 Radeon Instinct MI50 GPUs.

The nodes are interconnected with 2 x HDR100 InfiniBand, used both for internode communication and for disk I/O to the SciNet Niagara filesystems. In total, the cluster contains 960 CPU cores and 160 GPUs.
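The cluster-wide totals follow directly from the per-node figures. The short sketch below only restates that arithmetic; the constant names and the `cluster_totals` helper are illustrative, not part of any SciNet tool.

```python
# Per-node figures for Rouge, as stated on this page.
NODES = 20
CORES_PER_NODE = 48      # one AMD EPYC 7642 per node
GPUS_PER_NODE = 8        # Radeon Instinct MI50
RAM_GB_PER_NODE = 512


def cluster_totals(nodes: int = NODES) -> dict[str, int]:
    """Multiply per-node resources by the node count (hypothetical helper)."""
    return {
        "cpu_cores": nodes * CORES_PER_NODE,
        "gpus": nodes * GPUS_PER_NODE,
        "ram_gb": nodes * RAM_GB_PER_NODE,
    }


if __name__ == "__main__":
    for name, value in cluster_totals().items():
        print(f"{name}: {value}")
```

This reproduces the 960 cores and 160 GPUs quoted above, and gives 10,240 GB of RAM across all 20 nodes.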

The user experience on Rouge is similar to that on Niagara and Mist, in that it uses the same scheduler and software module framework.

The cluster was named after the Rouge River that runs through the eastern part of Toronto and surrounding cities.

The system is currently in its beta testing phase. Existing Niagara and Mist users affiliated with the University of Toronto can request early access by writing to support@scinet.utoronto.ca.

In tandem with this SciNet-hosted system, AMD, in collaboration with Penguin Computing, has also provided access to a cloud system with the same architecture.

Specifics of the cluster:

- Rouge consists of 20 nodes.

- Each node has a 48-core AMD EPYC 7642 CPU (2-way hyperthreaded) and 8 AMD Radeon Instinct MI50 GPUs.

- There is 512 GB of RAM per node.

- HDR InfiniBand one-to-one network between nodes.

- Shares file systems with the Niagara cluster (parallel filesystem: IBM Spectrum Scale, formerly known as GPFS).

- No local disks.

- Theoretical peak performance (“Rpeak”) of 1.6 PF (double precision), 3.2 PF (single precision).

- Technical documentation can be found at SciNet’s documentation wiki.
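When sizing a job that uses only some of a node's GPUs, it can help to know what one GPU's proportional share of the node would be. The sketch below assumes a hypothetical even split of CPU cores, hardware threads and memory across the 8 GPUs; how the scheduler actually allocates CPU and memory to GPU jobs on Rouge is not described here, so treat these numbers as a rough guide only. `NodeSpec` and `per_gpu_share` are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    cores: int = 48      # AMD EPYC 7642
    smt_ways: int = 2    # 2-way hyperthreading
    gpus: int = 8        # Radeon Instinct MI50
    ram_gb: int = 512


def per_gpu_share(node: NodeSpec = NodeSpec()) -> dict[str, int]:
    """Divide one node's CPU and memory evenly across its GPUs.

    Assumption: an even split; this is not a documented scheduler policy.
    """
    return {
        "cores": node.cores // node.gpus,
        "hardware_threads": node.cores * node.smt_ways // node.gpus,
        "ram_gb": node.ram_gb // node.gpus,
    }


print(per_gpu_share())
```

Under that assumption, each MI50 corresponds to 6 physical cores (12 hardware threads) and 64 GB of RAM.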